Introduction

In the realm of artificial intelligence and machine learning, PyTorch has emerged as a powerful framework that is revolutionizing the way developers and researchers approach these fields.

Developed by Facebook’s AI Research lab (FAIR), PyTorch offers a dynamic, flexible, and intuitive platform for building and training neural networks.

This article delves into the features, benefits, and applications of PyTorch, shedding light on why it has become a preferred choice among machine learning enthusiasts.

Understanding PyTorch

PyTorch is an open-source machine learning library that is primarily based on the Torch library.

What sets PyTorch apart is its dynamic computation graph, which allows developers to modify the architecture of neural networks on the fly.

This dynamic approach offers greater flexibility and ease of debugging, making it an attractive option for both beginners and experts in the field.

Key Features and Benefits

Dynamic Computational Graph:

Unlike some other frameworks that rely on static computation graphs, PyTorch employs dynamic computation graphs.

This means that nodes in the graph are created and modified as the code is executed.

This feature enables rapid experimentation and easier debugging, as you can inspect variables and make changes in real-time.
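As a minimal sketch of this behavior, the snippet below lets ordinary Python control flow decide how many operations are recorded on each call. The function name `forward` and the variable `steps` are illustrative choices, not part of any PyTorch API.

```python
import torch

def forward(x: torch.Tensor, w: torch.Tensor) -> torch.Tensor:
    # A plain Python branch decides how many times w is applied,
    # so the recorded graph can differ from one call to the next.
    steps = 1 if x.sum() > 0 else 3
    h = x
    for _ in range(steps):
        h = torch.tanh(h @ w)
    return h.sum()

w = torch.eye(2, requires_grad=True)

# First call: positive input, one layer is recorded.
loss_pos = forward(torch.tensor([1.0, 2.0]), w)
loss_pos.backward()
grad_one_step = w.grad.clone()

# Second call: negative input, three layers are recorded.
w.grad.zero_()
loss_neg = forward(torch.tensor([-1.0, -2.0]), w)
loss_neg.backward()
grad_three_steps = w.grad.clone()
```

Each call to `backward()` follows whichever graph was built during that particular forward pass, which is why the two gradients differ.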

Tensor Computation:

PyTorch provides a robust tensor library, which forms the backbone of most machine learning computations.

Tensors are multidimensional arrays that can be manipulated efficiently, making complex mathematical operations associated with neural networks more manageable.
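To make this concrete, here is a small sketch of a few common tensor operations. The values and variable names are made up for illustration.

```python
import torch

a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
b = torch.ones(2, 2)

elementwise = a * b + 1      # element-wise multiply, then add a broadcast scalar
product = a @ a.T            # matrix multiplication with the transpose
col_means = a.mean(dim=0)    # mean of each column
reshaped = a.reshape(4)      # the same values as a flat vector
```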

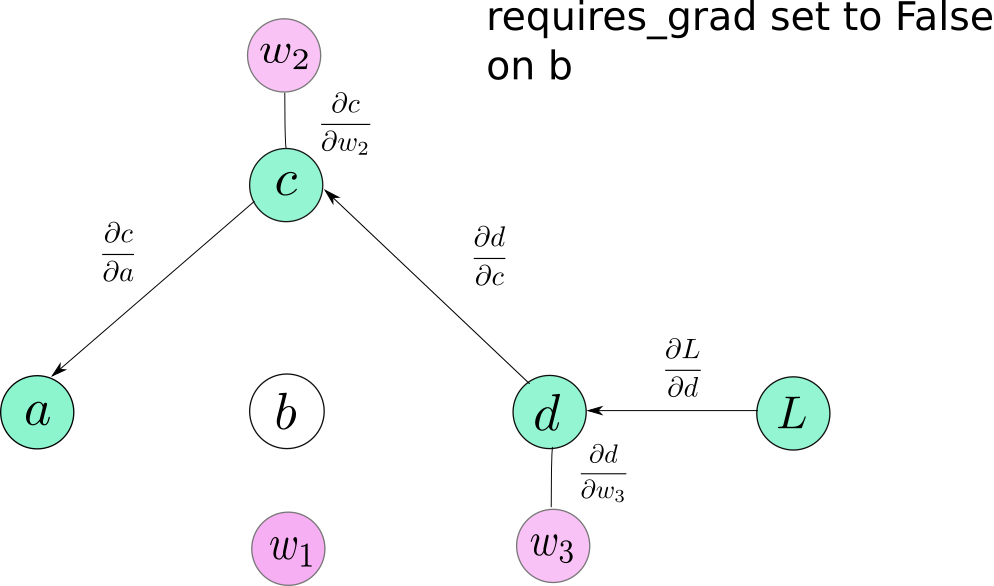

Autograd:

Automatic differentiation is a crucial component of training neural networks.

PyTorch’s built-in autograd package automates the computation of gradients, allowing for efficient backpropagation.

This simplifies the process of fine-tuning models and optimizing their performance.
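A tiny worked example may help here. The sketch below differentiates y = x^2 + 2x at x = 3, where the derivative 2x + 2 equals 8. The function is an arbitrary illustration.

```python
import torch

x = torch.tensor(3.0, requires_grad=True)  # track operations on x
y = x ** 2 + 2 * x                         # y = x^2 + 2x
y.backward()                               # compute dy/dx and store it in x.grad

gradient = x.grad.item()                   # 2 * 3 + 2 = 8.0
```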

Neural Network Module:

PyTorch offers the nn module, which provides pre-built layers and loss functions, while the companion torch.optim package supplies optimization algorithms.

This module accelerates the process of constructing neural networks, making it easier to piece together complex architectures.
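The following sketch shows how these pieces typically fit together: layers and a loss from `torch.nn`, an optimizer from `torch.optim`, and a short training loop. The layer sizes, learning rate, and synthetic data are arbitrary assumptions chosen to keep the example small.

```python
import torch
from torch import nn

torch.manual_seed(0)

# A small network assembled from pre-built layers.
model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)

# Synthetic regression data: the target is the sum of the input features.
inputs = torch.randn(16, 4)
targets = inputs.sum(dim=1, keepdim=True)

losses = []
for _ in range(50):
    optimizer.zero_grad()                    # clear gradients from the previous step
    loss = loss_fn(model(inputs), targets)   # forward pass and loss
    loss.backward()                          # backpropagation
    optimizer.step()                         # parameter update
    losses.append(loss.item())
```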

Eager Execution:

Eager execution in PyTorch facilitates an interactive and imperative programming style.

This means you can write code and see the results immediately, similar to standard Python programming.

This feature aids in understanding and debugging complex model structures.
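As a brief illustration, every line in the sketch below produces a concrete tensor that can be printed or inspected right away, with no separate compile or session step. The values are arbitrary.

```python
import torch

x = torch.arange(6, dtype=torch.float32).reshape(2, 3)  # [[0, 1, 2], [3, 4, 5]]
hidden = torch.relu(x - 2.0)                            # negative entries become 0

# The intermediate result already exists and can be inspected like any Python value.
print(hidden)
total = hidden.sum().item()
```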

Applications of PyTorch

Research and Experimentation:

The dynamic nature of PyTorch makes it a preferred choice for researchers and academics who are constantly exploring novel neural network architectures and algorithms.

The ease of modifying models and observing their behavior in real-time accelerates the research process.

Natural Language Processing (NLP):

PyTorch has gained traction in the field of NLP due to its flexibility in handling sequence-based data.

Many state-of-the-art language models, such as BERT and GPT-2, have widely used PyTorch implementations, for example in the Hugging Face Transformers library.

Computer Vision:

Image classification, object detection, and image generation are areas where PyTorch shines.

Its ability to handle complex image data and integrate with libraries like torchvision has led to numerous breakthroughs in computer vision.

Transfer Learning:

PyTorch’s ecosystem includes pre-trained models that can be fine-tuned for specific tasks using transfer learning.

This is particularly beneficial when dealing with limited labeled data.

Dynamic Computational Graph:

The dynamic computation graph in PyTorch allows for a more intuitive and interactive development process.

While some other frameworks require you to define the entire network architecture upfront, PyTorch lets you modify the network structure as you go.

This is particularly useful when experimenting with new ideas or adapting models to different tasks.

Because the graph is rebuilt on every forward pass, the same model can also handle batches whose input sizes vary, such as sequences of different lengths.

Eager Execution:

Eager execution, a hallmark of PyTorch, enables developers to execute operations immediately as they are called in the code.

This contrasts with static graph frameworks, where you compile the graph first and then execute it.

Eager execution encourages a more Pythonic coding style, making it easier to understand and debug the code.

However, static graph frameworks might offer slight performance advantages during training due to their ability to optimize computations.

Customizable Components:

PyTorch’s modular design allows developers to create custom components easily.

You can define your own neural network layers, loss functions, and even custom gradients for specialized tasks.

This flexibility is particularly beneficial when working on innovative architectures or incorporating domain-specific requirements.
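A minimal sketch of both ideas follows: a custom layer built by subclassing `nn.Module`, and a custom gradient defined with `torch.autograd.Function`. The names `ScaledLinear` and `ClampedSquare` are hypothetical, and the clipped gradient is only an illustration of overriding the backward pass.

```python
import torch
from torch import nn

class ScaledLinear(nn.Module):
    """Hypothetical custom layer: a linear layer with one learnable output scale."""

    def __init__(self, in_features: int, out_features: int):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        self.scale = nn.Parameter(torch.ones(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.scale * self.linear(x)

class ClampedSquare(torch.autograd.Function):
    """Hypothetical op: computes x^2 but clips its gradient to [-1, 1]."""

    @staticmethod
    def forward(ctx, x):
        ctx.save_for_backward(x)
        return x ** 2

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        # The true derivative is 2x; here it is deliberately clipped.
        return grad_output * (2 * x).clamp(-1.0, 1.0)

layer = ScaledLinear(3, 2)
out = layer(torch.randn(5, 3))

v = torch.tensor([0.25, 4.0], requires_grad=True)
ClampedSquare.apply(v).sum().backward()
```

After the backward pass, `v.grad` holds the clipped values rather than the true derivative, which shows that the custom `backward` was used.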

Community and Resources:

PyTorch boasts an active and rapidly growing community.

This means there’s a wealth of tutorials, documentation, and online discussions available to help newcomers and experts alike.

The community contributes to the development of various tools and libraries that expand PyTorch’s capabilities and simplify complex tasks.

Deployment:

While PyTorch is often associated with research and experimentation, it’s also gaining ground in deployment.

PyTorch’s integration with the ONNX (Open Neural Network Exchange) format allows models to be exported and used in other frameworks for inference.

Additionally, TorchScript, which is part of PyTorch itself, enables users to convert PyTorch models into serializable, optimizable representations for production environments.
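As a rough sketch of the TorchScript path, the snippet below traces a small model with `torch.jit.trace`, saves it to an in-memory buffer, and loads it back. The model and the use of `io.BytesIO` instead of a file on disk are assumptions made to keep the example self-contained.

```python
import io

import torch
from torch import nn

model = nn.Sequential(nn.Linear(4, 2), nn.ReLU()).eval()
example = torch.randn(1, 4)

# Record the operations executed for the example input.
traced = torch.jit.trace(model, example)

# Serialize to a buffer; a file path would work the same way.
buffer = io.BytesIO()
torch.jit.save(traced, buffer)
buffer.seek(0)

# The restored module can be run without the original Python class definitions.
restored = torch.jit.load(buffer)
with torch.no_grad():
    same = torch.allclose(model(example), restored(example))
```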

Parallelism and GPU Acceleration:

PyTorch supports GPU acceleration out of the box, making it efficient for training and evaluating models.

Moreover, it offers tools for parallelizing computations across multiple GPUs, which is crucial when dealing with large datasets and complex architectures.
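A common pattern, sketched below, is to pick a device once and move both the model and the data to it. The code falls back to the CPU when no GPU is available, so it runs on any machine. The layer and batch sizes are arbitrary.

```python
import torch
from torch import nn

# Use the GPU when one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(8, 2).to(device)         # move parameters to the device
batch = torch.randn(4, 8, device=device)   # create the data on the same device
output = model(batch)
```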

Distributed Learning:

Distributed training is essential for scaling up machine learning models.

PyTorch supports distributed data parallelism, allowing users to train models across multiple machines seamlessly.

This capability is vital for tackling more extensive datasets and training larger models in less time.

Research-Grade Models:

PyTorch’s dynamic nature makes it a natural choice for developing research-grade models.

This is especially important in fields like reinforcement learning, where experimentation is prevalent.

Research-driven projects often require rapid iteration and testing of different algorithmic approaches, and PyTorch’s design facilitates this process.

Frameworks Built on PyTorch:

Beyond the core library, PyTorch has paved the way for the development of other libraries and frameworks that extend its capabilities.

Notable examples include fastai for high-level training, Hugging Face Transformers for NLP models, and PyTorch Lightning for streamlined training and experiment management.

In summary, PyTorch’s dynamic graph, eager execution, customizable components, and strong community support make it a preferred tool for machine learning research, experimentation, and deployment.

Its flexibility, combined with a rich ecosystem of libraries and resources, has led to groundbreaking advancements in various AI domains.

Whether you’re a seasoned researcher or a newcomer to machine learning, PyTorch offers the tools and flexibility to bring your ideas to life.

Transfer Learning and Pre-Trained Models:

PyTorch facilitates transfer learning by providing access to a wide range of pre-trained models.

These models have been trained on massive datasets for various tasks.

By fine-tuning these models on your specific dataset, you can benefit from their learned features and achieve impressive results with less labeled data.
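The fine-tuning pattern can be sketched as follows. To avoid downloading real weights, a small `backbone` stands in for a pre-trained feature extractor. That stand-in, the layer sizes, and the random data are all assumptions for illustration.

```python
import torch
from torch import nn

# Stand-in for a pre-trained feature extractor (in practice, loaded with trained weights).
backbone = nn.Sequential(nn.Linear(16, 32), nn.ReLU())
for param in backbone.parameters():
    param.requires_grad = False            # freeze the learned features

head = nn.Linear(32, 3)                    # new task-specific classifier
model = nn.Sequential(backbone, head)

# Only the new head is handed to the optimizer.
optimizer = torch.optim.Adam(head.parameters(), lr=1e-3)
before = backbone[0].weight.clone()

x = torch.randn(8, 16)
y = torch.randint(0, 3, (8,))
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()
optimizer.step()
```

After the step, the backbone weights are unchanged and only the head has received gradients.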

Graphical User Interfaces (GUIs) and Visualization:

PyTorch comes with tools that aid in visualizing neural networks, training progress, and model behavior.

The built-in torch.utils.tensorboard module and libraries like TensorBoardX enable you to visualize training curves, model architectures, and even feature activations, helping you gain insights into your models’ performance and behavior.

Hyperparameter Tuning:

The flexibility of PyTorch makes it well-suited for hyperparameter tuning.

You can easily experiment with different settings to find the optimal combination for your specific task.

Libraries like Optuna and Ray Tune can be integrated with PyTorch to automate and optimize the hyperparameter search process.
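Before reaching for a dedicated tuning library, the basic idea can be sketched as a plain loop over candidate values. The example below compares three learning rates on a synthetic regression task. The helper `final_loss`, the candidate values, and the data are hypothetical, and this manual search is a simplified stand-in for what tools like Optuna or Ray Tune automate.

```python
import torch
from torch import nn

def final_loss(lr: float, steps: int = 100) -> float:
    """Train a tiny linear model with the given learning rate and return the last loss."""
    torch.manual_seed(0)                   # same data and initialization for every trial
    model = nn.Linear(3, 1)
    x = torch.randn(32, 3)
    y = x @ torch.tensor([[1.0], [-2.0], [0.5]])
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    for _ in range(steps):
        optimizer.zero_grad()
        loss = nn.functional.mse_loss(model(x), y)
        loss.backward()
        optimizer.step()
    return loss.item()

# Evaluate each candidate and keep the one with the lowest final loss.
results = {lr: final_loss(lr) for lr in (0.001, 0.01, 0.1)}
best_lr = min(results, key=results.get)
```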

Privacy and Security:

Through companion libraries such as Opacus, PyTorch supports differentially private training, which lets you train models while limiting what they reveal about sensitive data.

This is particularly crucial when dealing with personal information in applications such as healthcare or finance.

Reinforcement Learning:

PyTorch is also a popular choice for developing reinforcement learning algorithms.

Its dynamic computation graph and support for custom environments make it well-suited for building and training agents in various domains, including robotics and gaming.

Quantization and Model Compression:

To deploy models on resource-constrained devices, model quantization and compression are essential.

PyTorch provides tools to quantize models, reducing memory and computation requirements while maintaining acceptable performance.

Interoperability with Other Libraries:

PyTorch can be seamlessly integrated with other libraries and frameworks.

For example, it can be combined with OpenCV for image processing tasks, scikit-learn for traditional machine learning, and even TensorFlow for specific use cases.
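Much of this interoperability runs through NumPy arrays, which libraries such as OpenCV and scikit-learn also use. The sketch below assumes NumPy is installed and shows a round trip between an array and a CPU tensor that share the same memory.

```python
import numpy as np
import torch

array = np.array([[1.0, 2.0], [3.0, 4.0]], dtype=np.float32)

tensor = torch.from_numpy(array)   # no copy: the tensor shares memory with the array
tensor.mul_(2)                     # the in-place change is visible through the array too

back = tensor.numpy()              # back to NumPy, again without copying
```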

Education and Learning:

PyTorch’s intuitive interface and interactive nature make it an excellent tool for educational purposes.

It’s often used in university courses and online tutorials to teach fundamental concepts in machine learning and deep learning.

On-Device AI:

PyTorch’s ability to export models to formats like ONNX and convert them to TorchScript makes it suitable for deploying AI models on edge devices.

This is particularly important for applications requiring real-time inference, such as robotics and IoT devices.

Research Reproducibility:

PyTorch promotes research reproducibility by allowing you to share your code and models easily.

Fixing random seeds and, where needed, enabling deterministic algorithms helps others reproduce your experiments closely, which builds credibility in the research community.
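One concrete ingredient of reproducible experiments is seeding the random number generator. The sketch below shows that the same seed yields the same random tensor. The helper name `sample` and the seed values are arbitrary.

```python
import torch

def sample(seed: int) -> torch.Tensor:
    torch.manual_seed(seed)   # reset PyTorch's random number generator
    return torch.randn(3)

first = sample(42)
second = sample(42)   # same seed, same values
other = sample(7)     # different seed, different values
```

For stricter guarantees, `torch.use_deterministic_algorithms(True)` asks PyTorch to use deterministic implementations where they are available. Results can still vary across hardware and library versions.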

Contributions from the Community:

The open-source nature of PyTorch has led to a plethora of contributions from the community.

From new architectures and optimization techniques to cutting-edge research implementations, the community actively enriches PyTorch’s capabilities.

Conclusion

In conclusion, PyTorch’s adaptability, extensibility, and numerous features have made it a prominent player in the world of machine learning and artificial intelligence.

Its applications span across diverse domains, from research and academia to industry and deployment.

Whether you’re building advanced deep learning models, conducting research, or deploying AI on the edge, PyTorch provides the tools and resources to turn your ideas into reality.

PyTorch’s dynamic nature, tensor computation, autograd, neural network module, and eager execution make it a top choice for developing cutting-edge machine learning models.

From its role in academic research to its applications in various domains like NLP and computer vision, PyTorch is empowering the future of AI by putting powerful tools in the hands of developers and researchers.

As the field continues to evolve, PyTorch’s contribution is poised to reshape the landscape of machine learning and artificial intelligence.